Facebook Removed Nearly 40% More Terrorist Content in Second Quarter -- Update

11 August 2020 - 6:48PM

Dow Jones News

By Rachael Levy

Facebook Inc. removed nearly 40% more content that it categorized as terrorism in the second quarter compared with the first three months of the year, the company said.

Facebook removed about 8.7 million pieces of such content -- a category that, under the company's definition, covers nonstate actors that engage in or advocate for violence to achieve political, religious or ideological aims -- in the second quarter of this year, up from 6.3 million in the first quarter.

That increase "was largely driven by improvements in our proactive detection technology to identify content that violates [Facebook's] policy," a company spokeswoman said.

For "organized hate" groups, a separate category, the company said it took down four million pieces of content, down from 4.7 million in the first quarter.

Facebook's Instagram platform removed about 388,800 pieces of terrorist content, down from 440,600 in the first quarter, but it removed more organized hate content in the second quarter -- 266,000 pieces versus 175,100 in the previous quarter.

White supremacist groups have been a focus for the social-media giant. The company said that since October of last year it has completed 14 network takedowns to remove 23 organizations in violation of Facebook's policies. The majority of those takedowns, nine of the 14, targeted "hate and/or white supremacist groups," including the KKK, the Proud Boys, Blood & Honour and Atomwaffen, the company said.

Facebook has put more than 250 white supremacist groups on its list of dangerous organizations, company officials said earlier, putting them alongside jihadist organizations such as al Qaeda.

The tech giant also recently banned a large segment of the boogaloo movement from its platform. Adherents of the loosely organized movement include white supremacists.

Facebook removed the boogaloo accounts after a targeted investigation by human analysts, officials said. The company has increasingly turned to humans to assess networks that actively try to avoid its automated content-monitoring tools.

Facebook's latest figures come after the company said in June it took down posts and ads for President Trump's re-election campaign because they violated the company's policy against "organized hate." The campaign ads claimed that "Dangerous MOBS of far-left groups" are causing mayhem and destroying cities and called on supporters to back President Trump's battle against antifa, a loosely organized activist movement that the White House has blamed for unrest.

The ads featured a large, red downward-pointing triangle. The inverted red triangle is a marking Nazis used to designate political prisoners in concentration camps, according to the Anti-Defamation League and other groups. The Trump campaign said that the triangle is a common antifa symbol, though some experts on extremist groups have disputed that.

Other social-media platforms have also faced pressure to remove information tied to groups classified as terrorist organizations. Last year, Twitter Inc. suspended accounts linked to Palestinian group Hamas and Iran-backed militant group Hezbollah. U.S. lawmakers had criticized Twitter for allowing those entities to remain active on the platform even though the State Department designated both as terrorist organizations.

Social-media companies have also faced tensions over how to handle misleading posts. Twitter earlier this year labeled tweets by President Trump about mail-in ballots as misinformation, highlighting a widening divide among big tech platforms on how they handle political speech as the U.S. presidential election approaches.

Write to Rachael Levy at rachael.levy@wsj.com

(END) Dow Jones Newswires

August 11, 2020 12:33 ET (16:33 GMT)

Copyright (c) 2020 Dow Jones & Company, Inc.

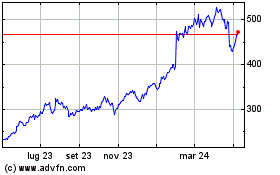

Grafico Azioni Meta Platforms (NASDAQ:META)

Storico

Da Mar 2024 a Apr 2024

Grafico Azioni Meta Platforms (NASDAQ:META)

Storico

Da Apr 2023 a Apr 2024