Facebook Doesn't Want to Censor Political Ads Over Accuracy, Executive Says

22 October 2019 - 7:30AM

Dow Jones News

By Georgia Wells

LAGUNA BEACH, CALIF.-- Facebook Inc. doesn't want to censor paid

content from politicians based on accuracy but it would draw the

line at political ads if they encourage violence, Chief Technology

Officer Mike Schroepfer said Monday.

Facebook wants to moderate content as little as possible

because, as a private company, it doesn't want to make decisions

about speech, Mr. Schroepfer said, echoing recent comments from

Facebook CEO Mark Zuckerberg. But an ad posted by a politician that

might inspire imminent harm to another person would be barred from

the platform, Mr. Schroepfer said at the WSJ Tech Live conference

here.

"It's pretty high up in the policy ranking of the things we

don't want on the platform," he said. "If this speech is going to

cause...harm, I think most people think that's a good place to

start intervening."

Facebook angered Democrats earlier this month with its refusal

to remove an ad from President Donald Trump's re-election campaign

that made an unsubstantiated claim about former Vice President Joe

Biden's role in the ouster of a Ukrainian prosecutor.

Facebook denied the Biden campaign's request to remove the ad,

which the campaign said was false. That decision was made according

to a policy Facebook announced in September that it won't

fact-check speech or advertising by politicians.

Political advertising has caused a disproportionate headache for

Facebook compared with the amount of revenue it provides the

company. "We have had heated debates about whether political ads

are worth it," Mr. Schroepfer said. "There's an argument that it's getting

abused too much, and there's an argument about access."

Elizabeth Warren in early October accused Facebook of

prioritizing profit over protecting democracy.

Mr. Schroepfer said he believes political advertising is good

because it could allow potential candidates who can't afford

broadcast ads to still promote themselves.

Facebook is still building out other aspects of its advertising

policy, including how the company should treat content manipulated

with artificial intelligence or other technology, known as

"deepfakes," Mr. Schroepfer said. "This is a part of our policy

that is still in discussion."

If a video has been edited, it may be eligible for fact-checking,

and potentially labeled as misinformation. "There's not a policy

yet for how do you treat it just because it is an AI-created

thing," he said.

Twitter Inc. said earlier at the conference that it is planning

a new policy for deepfake content.

Meanwhile, Facebook is also working to better train its

algorithms to detect violent videos, Mr. Schroepfer said. The

company hopes to prevent a repeat of the March tragedy when a video

of the massacre in Christchurch, New Zealand, remained on Facebook

for an hour before the company took it down.

"AI systems need examples to understand what they're looking

for. We literally didn't have a lot of first-person examples like

that," he said.

Since Christchurch, Facebook has partnered with the London

Metropolitan Police Service to get more data related to

first-person attacks. Facebook provided the police with cameras,

and the police are running training simulations of these types of

attacks.

"We're going to feed that straight into our systems to detect

this," he said.

Write to Georgia Wells at Georgia.Wells@wsj.com

(END) Dow Jones Newswires

October 22, 2019 01:15 ET (05:15 GMT)

Copyright (c) 2019 Dow Jones & Company, Inc.

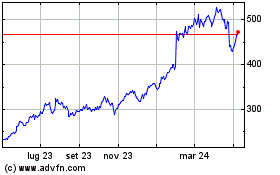

Grafico Azioni Meta Platforms (NASDAQ:META)

Storico

Da Mar 2024 a Apr 2024

Grafico Azioni Meta Platforms (NASDAQ:META)

Storico

Da Apr 2023 a Apr 2024